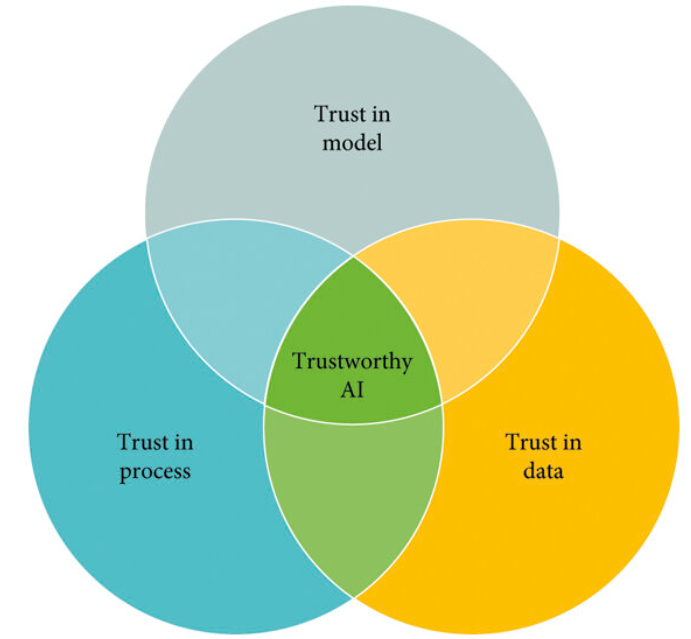

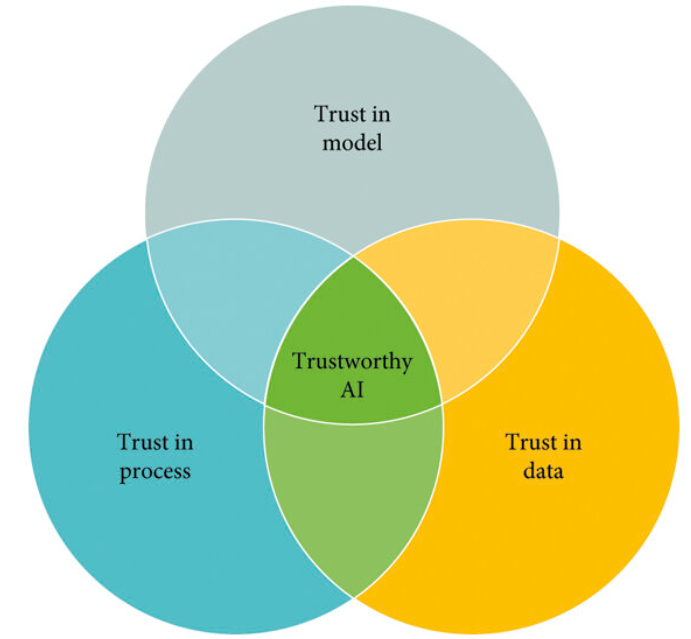

AI is ruling the tech world, and broad AI adoption across industry verticals looks closer than ever before. Though these advancements are reaching new highs, the harm they might cause is also serious and daunting. AI will reach its full potential only when trust can be established at each stage of its lifecycle, from design to development, deployment and use. Trustworthy AI is a term used to describe AI that is lawful, ethical, and technically robust.

AI models and their decisions are difficult to understand, even for experts. The stakeholders involved in an AI system’s lifecycle should be able to understand why the AI arrived at a decision and at which point the outcome could have been different. Explainable AI (XAI) offers methods to expose the features that contribute most to a model’s output, for example the inputs that drive a high risk score. According to Gartner, by 2025, 30 percent of government and large enterprise contracts for the purchase of AI products and services will require the use of AI that is explainable and ethical.
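One common way to expose which features drive a score is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below is a minimal illustration under stated assumptions, not a production XAI method. The toy `risk_score` model, its three features (`debt_ratio`, `late_payments`, `shoe_size`) and the synthetic data are all hypothetical.

```python
import random


def risk_score(row):
    """Hypothetical toy model: the score depends mostly on debt_ratio."""
    debt_ratio, late_payments, shoe_size = row
    return 0.7 * debt_ratio + 0.3 * late_payments + 0.0 * shoe_size


def mean_abs_error(model, rows, targets):
    """Average absolute gap between model output and target."""
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, seed=0):
    """Increase in error when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = mean_abs_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by a shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_abs_error(model, shuffled, targets) - baseline)
    return importances


# Synthetic data (assumption): 200 rows of three features in [0, 1).
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random())
        for _ in range(200)]
targets = [risk_score(r) for r in rows]  # the toy model fits this data exactly

importances = permutation_importance(risk_score, rows, targets, n_features=3)
for name, value in zip(["debt_ratio", "late_payments", "shoe_size"], importances):
    print(f"{name}: {value:.3f}")
```

In this toy setup the feature with the largest weight shows the largest importance, and the feature the model ignores shows none. Real models call for more care, for example repeated shuffles and held-out data.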

An AI system is deemed trustworthy if the components listed below are implemented and achieved at each stage of its lifecycle, from design to development, deployment and use.

The data, systems and business models related to AI should be transparent. Humans should be aware when they are interacting with an AI system.

AI systems need to protect privacy across the whole system lifecycle, from training to production. This covers the personal data initially provided by the user as well as the information generated about the user over the course of their interaction with the system.

Explanations are necessary to enhance understanding and allow all involved stakeholders to make informed decisions.

Ensuring that AI systems are fair and include proper safeguards against bias and discrimination will lead to more equal treatment of all users and stakeholders.
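One simple safeguard is to compare how often each group receives a favourable decision. The sketch below computes a demographic parity difference, meaning the gap between the highest and lowest selection rates across groups. It is only one of several fairness measures, and the decisions and group labels here are made-up illustrative data.

```python
def selection_rate(decisions):
    """Share of favourable (1) decisions in a list of 0/1 outcomes."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest per-group selection rates."""
    by_group = {}
    for decision, group in zip(decisions, groups):
        by_group.setdefault(group, []).append(decision)
    rates = {g: selection_rate(ds) for g, ds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model decisions (1 = approved) for two groups, A and B.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(decisions, groups)
print(rates)  # per-group approval rates
print(gap)    # 0.0 would mean equal approval rates across groups
```

A large gap does not prove discrimination on its own, but it flags a disparity that should be investigated and explained.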

AI systems must be accurate, able to handle exceptions, perform well over time and be reproducible.

Today, AI can generate new content, such as text, images, audio, and code. It does this by learning the patterns and structures of existing data and then using that knowledge to create new data similar to the data it was trained on. Achieving Trustworthy AI with these essential characteristics involves the following steps:

Awareness and engagement among those who build AI models, policymakers, researchers, and those implementing AI-enabled systems, covering the system, its data and its explanations, will be necessary to create a trustworthy AI environment.

Existing policies should be adapted to reflect the potential impact of AI on the business and its users. The development of new compliance policies should include both technical mitigation measures and human oversight.

Consider how objectives for the system are set, how the model is trained, what privacy and security safeguards are needed, what data are used, and what the implications are for the end user and society.

Relevant risks can be challenging to identify for any AI system. The European Union’s Ethics Guidelines for Trustworthy AI provide an assessment list to help companies define risks associated with AI.

Companies are accountable for the development, deployment and usage of AI technologies and systems. There should be a commitment from all parties involved to ensure that the system is trustworthy and in compliance with current laws and regulations.

Generative AI has the potential to revolutionize many industries and transform our lives in many ways. It also poses societal risks such as bias, preventable errors, poor decision-making, misinformation and manipulation, and it could threaten democracies and undermine social trust through deepfakes and online bots. A recent global report found a 10x increase in the number of deepfakes detected globally across all industries from 2022 to 2023.

To tackle the risks posed by AI, various attempts covering ethics, morals and legal values in the development and deployment of AI are being made at the national and international levels.

At the intergovernmental level, the OECD’s Recommendation on Artificial Intelligence, adopted in 2019, was the first such initiative. It set out 10 principles for trustworthy AI, calling for AI systems to be developed and deployed in a way that contributes to inclusive growth and sustainable development and benefits people and the planet, and for AI to be human-centred, transparent, accountable, robust, secure, safe, ethical, fair, and beneficial to society. Since May 2019, these principles have been adopted by 46 countries and endorsed by the G20.

Trustworthiness characteristics are inextricably tied to social and organizational behavior, the datasets used by AI systems, the selection of AI models and algorithms and the decisions made by those who build them, and the interactions with the humans who provide insight from and oversight of such systems. A comprehensive approach to risk management calls for balancing tradeoffs among the trustworthiness characteristics. The decision to commission or deploy an AI system should be based on a contextual assessment of trustworthiness characteristics and the relative risks, impacts, costs, and benefits, and should be informed by a broad set of interested parties.

At the micro level, AI affects individuals in everything from landing a job to retirement planning, securing home loans, job and driver safety, health diagnoses and treatment coverage, arrests and police treatment, political propaganda and fake news, conspiracy theories, and even our children’s mental health and online safety.

By embracing responsible AI development and deployment practices, we can harness the power of AI to address global challenges and improve the lives of people worldwide. We must also remain vigilant in mitigating the potential risks posed by AI, such as bias, discrimination, and misuse, and help people learn to identify misinformation. Only through a collaborative effort among governments, businesses, academia, and civil society can we ensure that AI is trustworthy and is developed and used responsibly for the benefit of all.